How the Brain Senses Space: Visual and Auditory Representations and their Role in Cognition

Description

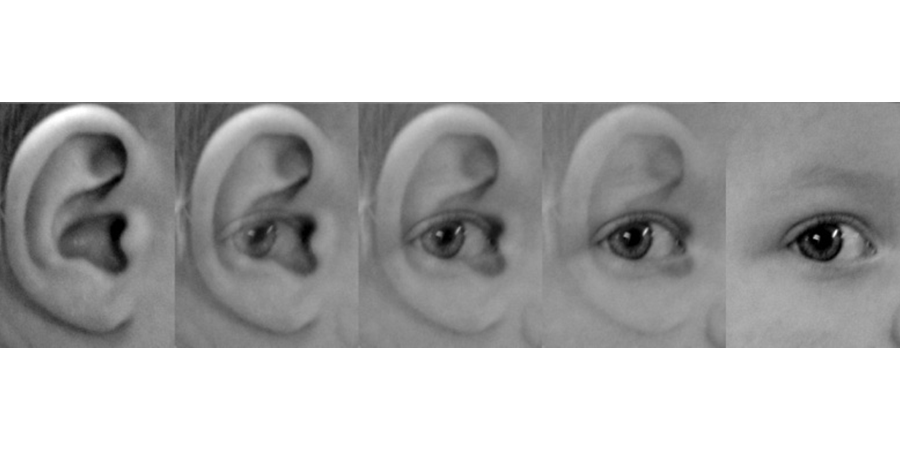

Sensing space – the world around us and our position in it – is one of the most important jobs the brain faces. Spatial information derives from many sources – vision, hearing, balance, touch, proprioception, movement and memory – and these systems must coordinate with each other to synthesize our spatial knowledge. I will describe some of the computations that are essential to knitting together visual and auditory information, which requires reconciling not only different reference frames but also different formats for encoding information (akin to digital vs. analog coding). Recent work in my laboratory suggests that this process begins in the ear itself, through the brain's ability to exert motor control over the mechanics of the eardrum. Finally, I will discuss the important role that spatial sensory and motor processing plays in supporting our abilities to think and remember.

Speaker Bio

Jennifer Groh is a Professor of Psychology & Neuroscience and of Neurobiology, and a faculty member in the Center for Cognitive Neuroscience, at Duke University, where she was formerly co-director of the Duke Institute for Brain Sciences. She is the author of the book Making Space: How the Brain Knows Where Things Are (Harvard University Press, 2014), which was named "Best of the Best of the University Presses" by the American Library Association. Her NIH-funded research focuses on the algorithms the brain employs to link visual and auditory space, and on how these algorithms are implemented across different brain structures. She is the author of numerous scientific publications at the intersection of computational and systems neuroscience, and she has received a John Simon Guggenheim Fellowship for her work.