Testing and improving primate ventral stream models of core object recognition

Description

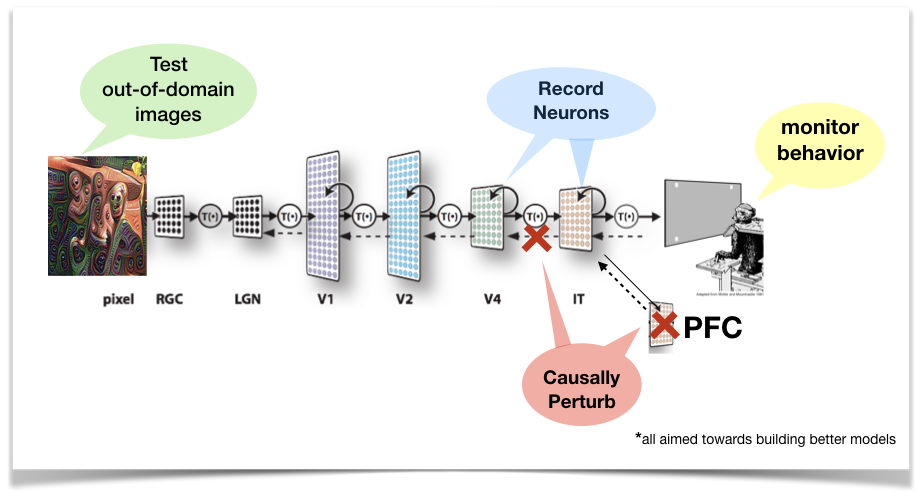

Recent progress in AI has produced multiple high-performing computational models that solve tasks very similar to those humans solve, e.g., core object recognition. My current research approach is built on the premise that, because primates and such complex AI models share behavioral goals, this class of models provides a formidable hypothesis space for testing neural representations and contains the likely answers to many important questions in systems neuroscience. However, the neuroscience community often dismisses AI models of the brain as “black boxes” that are overfit to the training data, too complicated, and too hard to comprehend, and therefore not useful. In our latest study (Bashivan*, Kar* and DiCarlo, 2019, Science) we demonstrated that, even though AI-based models of the brain are difficult for human minds to comprehend in detail, they embed knowledge of visual brain function. With a series of closed-loop neurophysiological recording and AI-based image synthesis experiments, we convincingly demonstrated that we can independently control both individual neurons and populations of neurons in the macaque brain, provided we have a good neural network model of those neurons. The current models, however, are far from perfect. So, to study their shortcomings, we compared the behavior of primates and off-the-shelf computer vision (CV) models. This led to the discovery of many images that are easy for primates but are not currently solved by the CV models. Further investigation into how the brain solves these images revealed evidence of feedback-related neural signals in the macaque inferior temporal (IT) cortex that are critical for primates to solve these challenge images (Kar et al. 2019, Nature Neuroscience); such signals are currently unavailable in the majority of AI-based models.
This study demonstrated how architectural limitations of feedforward AI systems can be exploited to gain insights into primate feedback computations. The results improve our understanding of the primate brain while providing valuable constraints for better brain-like AI. However, we do not yet know which brain circuits are most responsible for these additional, recurrent computations: circuits within the ventral stream? within IT? outside the ventral stream? all of the above? Following up on this, I have developed various pharmacological and chemogenetic strategies to causally perturb specific neural circuits in the macaque brain during multiple object recognition tasks, both to probe these questions further and to measure anatomical and functional constraints for future models.

Speaker Bio

Kohitij Kar is a Postdoctoral Associate in the DiCarlo Lab at the McGovern Institute for Brain Research at MIT. He completed his Ph.D. in the Department of Behavioral and Neural Sciences at Rutgers University in New Jersey (Ph.D. advisor: Bart Krekelberg). His current research focuses on using a variety of experimental and computational techniques to build better models of primate visual cognition.

Additional Info

Upcoming Cog Lunches:

- Tuesday, April 9 - Malinda McPherson

- Tuesday, April 16 - Daniel Czegel

- Tuesday, April 23 - Speaker TBA

- Tuesday, April 30 - Speaker TBA

- Tuesday, May 7 - Omar Costilla Reyes

- Tuesday, May 14 - Speaker TBA