Learning Concepts through Neural Logical Induction

Description

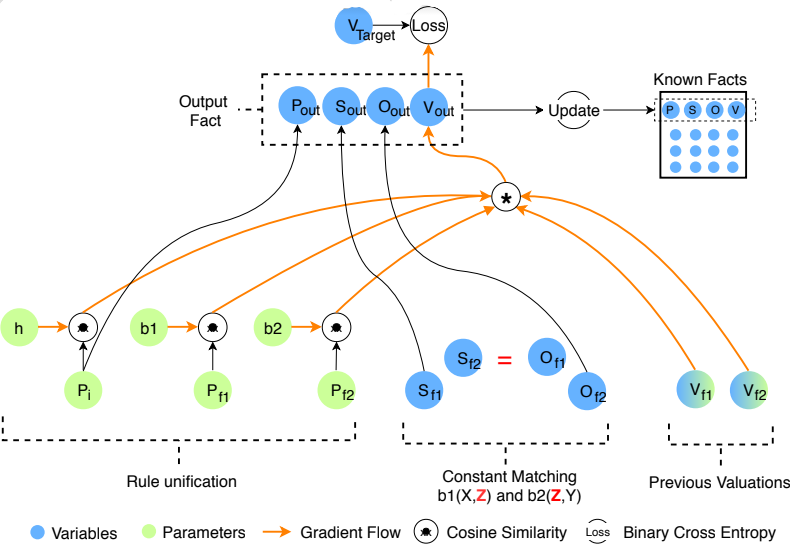

We present a framework for learning concepts that have both symbolic and subsymbolic content, thereby acquiring some of the advantages of each. Given semantic knowledge expressed as a set of facts of the form Relation[Subject, Object] (e.g., FATHER[Alberto, Andres]), a generative algorithm combines logical reasoning with neural networks to learn, simultaneously, the logical structure underlying the data (e.g., GRANDFATHER[X, Y] <-- FATHER[X, Z], PARENT[Z, Y]) and dense vector representations of the relations. We present recent and ongoing work, as well as future directions.
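To make the rule notation concrete, here is a minimal Python sketch of only the symbolic half: applying a chain rule of the form GRANDFATHER[X, Y] <-- FATHER[X, Z], PARENT[Z, Y] to a set of facts by simple forward chaining. Apart from FATHER[Alberto, Andres], the facts are hypothetical, and so is the helper `apply_chain_rule`. The sketch neither learns rules nor learns dense relation embeddings, and it is not the framework presented in the talk.

```python
from itertools import product

# Facts of the form Relation[Subject, Object], stored as (relation, subject, object) triples.
# Only ("FATHER", "Alberto", "Andres") comes from the abstract; the rest are hypothetical.
facts = {
    ("FATHER", "Alberto", "Andres"),
    ("PARENT", "Andres", "Lucia"),
    ("PARENT", "Andres", "Pablo"),
}


def apply_chain_rule(head, body1, body2, facts):
    """Derive head[X, Y] for every Z such that body1[X, Z] and body2[Z, Y] are facts."""
    derived = set()
    # Compare every pair of facts; keep pairs whose relations match the rule body
    # and whose shared variable Z (object of the first, subject of the second) agrees.
    for (r1, x, z1), (r2, z2, y) in product(facts, repeat=2):
        if r1 == body1 and r2 == body2 and z1 == z2:
            derived.add((head, x, y))
    return derived


grandfather = apply_chain_rule("GRANDFATHER", "FATHER", "PARENT", facts)
print(sorted(grandfather))
# [('GRANDFATHER', 'Alberto', 'Lucia'), ('GRANDFATHER', 'Alberto', 'Pablo')]
```

A learning system must go the other way, searching for a rule body (here FATHER followed by PARENT) that explains the observed GRANDFATHER facts. The abstract describes doing this jointly with learning vector representations for the relations.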

Speaker Bio

I am interested in the interaction between symbolic probabilistic reasoning and subsymbolic statistical learning, with the aim of understanding and replicating higher-level cognition in a way that is meaningful in human cognitive terms. To this end, I explore models that combine structured generative frameworks, such as probabilistic programs, with deep learning. I am particularly interested in compositionality and the origin of concepts.

Additional Info

Upcoming Cog Lunches

- October 9, 2018 - Matthias Hofer & Andrew Francl

- October 16, 2018 - Jenelle Feather & Maddie Pelz

- October 23, 2018 - Eli Pollock & Mika Braginsky

- October 30, 2018 - Peng Qian, Jon Gauthier, & Maxwell Nye

- November 13, 2018 - Anna Ivanova, Halie Olson, & Junyi Chu

- November 20, 2018 - Mark Saddler, Jarrod Hicks, & Heather Kosakowski

- November 27, 2018 - Tuan Le Mau

- December 4, 2018 - Daniel Czegel

- December 11, 2018 - Malinda McPherson